Author: TurboGuo

TLDR:

Modulus Labs implements verifiable AI by executing ML computations off-chain and generating zero-knowledge proofs (ZKPs) for each AI inference call. This article re-examines their approach from an application perspective, analyzing which scenarios urgently need verifiable AI and which have weaker demand for it. Finally, it explores two types of architecture for AI applications built on public blockchains: horizontal and vertical. The main topics covered are as follows:

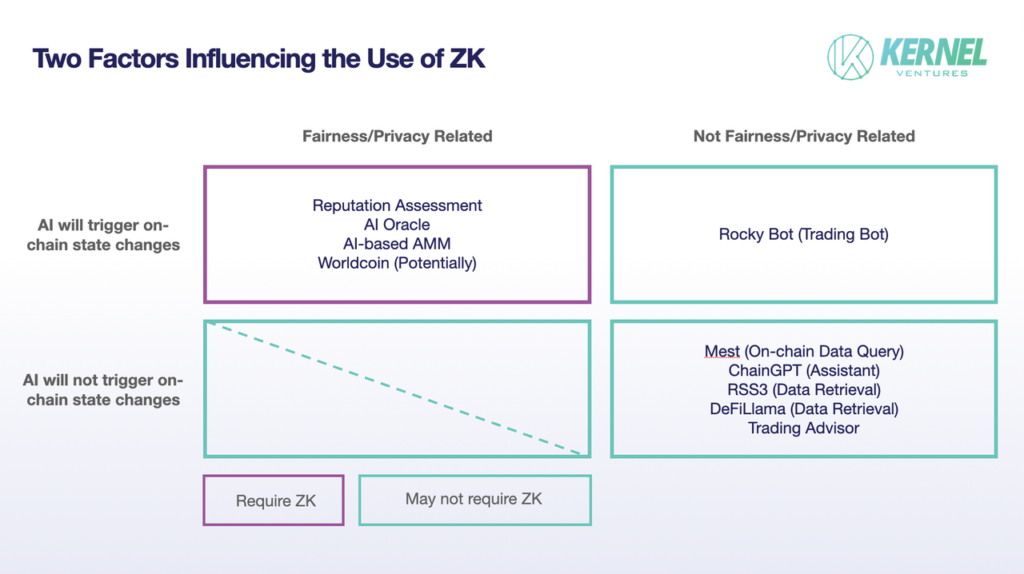

- The need for verifiable AI depends on whether AI triggers on-chain state changes and whether it involves fairness and privacy.

- When AI does not affect the on-chain state, it can serve as an advisor. People can judge the quality of AI services by their actual effects without needing to verify the computation process.

- When AI affects the on-chain state, if the service serves only individual users and raises no privacy issues, users can still judge the quality of AI services directly without examining the computation process.

- When AI outputs directly affect fairness among multiple parties or personal privacy, such as evaluating community members and allocating rewards, or processing biometric data, the ML computation needs to be verified.

- Vertical AI Application Architecture: Since one end of verifiable AI is a smart contract, verifiable AI applications, and even AI applications and native dApps, may be able to interact with each other trustlessly. This points to a potentially interoperable AI application ecosystem.

- Horizontal AI Application Architecture: A public blockchain can handle payments, dispute coordination, and the matching of user needs with the services of AI providers, giving users more freedom in accessing decentralized AI services.

Part 1: Introduction to Modulus Labs and Application Examples

1.1 Introduction and Core Solutions

Modulus Labs is an “on-chain” AI company that believes AI can significantly enhance the capabilities of smart contracts and make Web3 applications more powerful. However, applying AI to Web3 involves a conflict: AI requires a lot of computing power, which makes running it on-chain impractical, while off-chain AI is a black box, which runs counter to Web3's ideals of decentralization and verifiability.

Therefore, Modulus Labs borrows the approach of ZK-rollups and proposes an architecture for verifiable AI: ML models run off-chain, and ZKPs for the ML computation are also generated off-chain. These ZKPs can be used to verify the model architecture, weights, and inputs off-chain, and they can also be published on-chain for verification by smart contracts. With this verifiability, AI can interact with on-chain contracts in a more trustless way.
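To make the off-chain prove / on-chain verify split concrete, here is a minimal Python sketch under loud assumptions: a SHA-256 hash commitment stands in for the commitment to the model, and the "proof" simply carries the weights so the verifier can re-execute the model. That is verifiable but neither zero-knowledge nor succinct; a real zkML system would replace `verify` with a SNARK verifier that checks a short proof without seeing the weights. All names (`commit`, `prove`, `VerifierContract`) are hypothetical and are not Modulus Labs' API.

```python
import hashlib
import json
from dataclasses import dataclass


def commit(obj) -> str:
    """SHA-256 over canonical JSON: a stand-in for a real commitment scheme."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def infer(weights: dict, x: list) -> float:
    """Toy linear model (hypothetical): score = w . x + b."""
    return sum(w * xi for w, xi in zip(weights["w"], x)) + weights["b"]


@dataclass
class Proof:
    model_commitment: str
    input_commitment: str
    output: float
    # A real ZKP would be a short proof object. Here we carry the weights so the
    # verifier can re-execute the model, which is NOT zero-knowledge.
    opening: dict


def prove(weights: dict, x: list) -> Proof:
    """Off-chain step: run the model and package the result with commitments."""
    return Proof(commit(weights), commit(x), infer(weights, x), weights)


class VerifierContract:
    """On-chain step (simulated): accept only outputs of the registered model."""

    def __init__(self, registered_model_commitment: str):
        self.registered = registered_model_commitment

    def verify(self, proof: Proof, x: list) -> bool:
        if proof.model_commitment != self.registered:
            return False  # wrong model architecture/weights
        if commit(proof.opening) != proof.model_commitment:
            return False  # opening does not match the commitment
        if commit(x) != proof.input_commitment:
            return False  # proof was produced for different inputs
        return infer(proof.opening, x) == proof.output


weights = {"w": [0.5, -1.0], "b": 0.25}
x = [2.0, 1.0]
verifier = VerifierContract(commit(weights))
proof = prove(weights, x)
print(verifier.verify(proof, x))  # True
```

A tampered output, a different model, or different inputs all fail `verify`, which is the property the contract relies on before acting on an AI result.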

Based on the idea of verifiable AI, Modulus Labs has launched three “on-chain AI” applications so far and proposed many potential application scenarios.

1.2 Application Examples

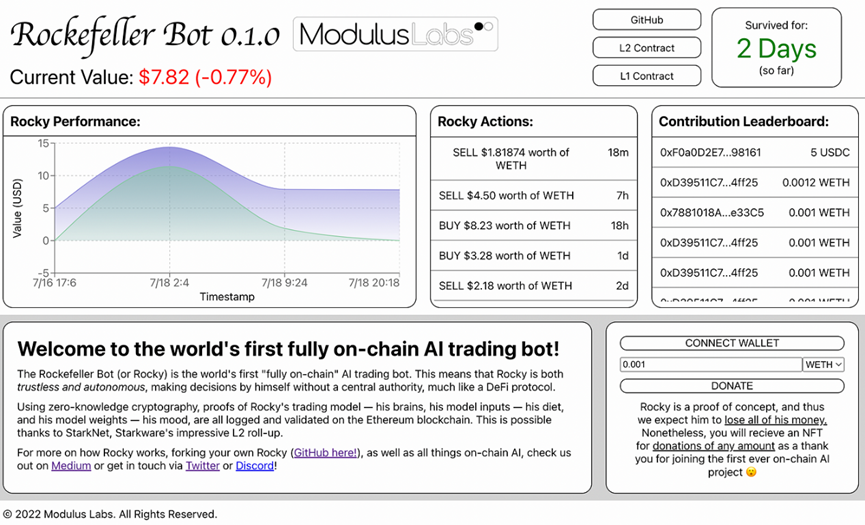

The first is an automated trading AI, “Rocky bot”. Rocky is trained on historical data from the WETH/USDC trading pair; it predicts future WETH price trends and makes trading decisions. It then generates a ZKP for the decision (computation) process and sends it to an L1 contract, which triggers the trade.

The second is an on-chain chess game, “Leela vs the World”, in which an AI plays against human players and the game state is stored in a contract. Players make moves by interacting with the contract via their wallets. The AI reads the new game state, decides on its move, and generates a ZKP for the entire computation. These steps run in the AWS cloud, while the ZKP is verified by an on-chain contract. If verification succeeds, the chess game contract is called to “make a move”.

The third is an on-chain AI artist that “launched” the zkMon NFT series. The key point is that the AI generates NFTs and publishes them on-chain together with a ZKP, so users can verify that their NFT was generated by the corresponding AI model.

In addition, Modulus Labs mentioned some other use cases:

- Use AI to evaluate personal on-chain data and other information to generate personal reputation ratings and publish ZKPs for users to verify.

- Use AI to optimize AMM performance and publish ZKPs for users to verify.

- Use verifiable AI to help privacy projects respond to regulatory pressure without exposing private data (for example, using ML to prove that a transaction is not associated with money laundering without revealing the user’s address or other information).

- AI oracles that publish ZKPs so anyone can verify the reliability of off-chain data.

- AI model competitions: competitors submit their own architectures and weights, run their models on uniform test inputs, and generate ZKPs for the computation; the contract then automatically sends rewards to the winner.

- Worldcoin has suggested that in the future, users may be able to download a model to their local device that generates an iris code from their iris. The model runs locally and generates a ZKP, so the on-chain contract can use the ZKP to verify that the user’s iris code was generated by the correct model from valid iris data, while the biometric information stays on the user’s own device.

Source: Modulus Labs
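Of the use cases above, the model competition is the easiest to make concrete. The sketch below is hypothetical: a toy linear model stands in for competitors' models, the test set is made up, and re-executing the committed model on the public test inputs stands in for verifying a ZKP (which, unlike this stand-in, would not require revealing the weights).

```python
import hashlib
import json


def commit(obj) -> str:
    """SHA-256 over canonical JSON: a stand-in for a real commitment scheme."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def predict(weights: dict, x: float) -> float:
    """Hypothetical competitor model: y = a * x + b."""
    return weights["a"] * x + weights["b"]


# Uniform public test set (hypothetical); labels generated by y = 2x + 1.
TEST_INPUTS = [0.0, 1.0, 2.0, 3.0]
TEST_LABELS = [1.0, 3.0, 5.0, 7.0]


class CompetitionContract:
    def __init__(self, prize: int):
        self.prize = prize
        self.entries = {}   # competitor -> verified outputs on TEST_INPUTS
        self.balances = {}

    def submit(self, competitor, weights_commitment, claimed_outputs, opening) -> bool:
        # Stand-in for ZKP verification: re-run the committed model on the test
        # inputs and reject the entry if the claimed outputs do not match.
        if commit(opening) != weights_commitment:
            return False
        if [predict(opening, x) for x in TEST_INPUTS] != claimed_outputs:
            return False
        self.entries[competitor] = claimed_outputs
        return True

    def settle(self) -> str:
        """Pay the prize to the verified entry with the lowest squared error."""
        def loss(outputs):
            return sum((y - t) ** 2 for y, t in zip(outputs, TEST_LABELS))

        winner = min(self.entries, key=lambda c: loss(self.entries[c]))
        self.balances[winner] = self.balances.get(winner, 0) + self.prize
        return winner


contest = CompetitionContract(prize=100)
alice = {"a": 2.0, "b": 1.0}
bob = {"a": 1.5, "b": 1.0}
contest.submit("alice", commit(alice), [predict(alice, x) for x in TEST_INPUTS], alice)
contest.submit("bob", commit(bob), [predict(bob, x) for x in TEST_INPUTS], bob)
# Carol claims perfect outputs, but her committed model cannot reproduce them.
contest.submit("carol", commit(bob), TEST_LABELS, bob)
print(contest.settle())  # alice
```

Because only verified entries are scored, a competitor cannot win by simply claiming good outputs.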

1.3 Discussion of Different Use Cases Based on the Need for Verifiable AI

1.3.1 Use Cases That May Not Require Verifiable AI

In Rocky Bot’s scenario, users may not need to verify the ML computation process.

First, most users lack the expertise to perform real verification. Even with verification tools, all a user sees is that they pressed a button and a pop-up told them the AI service was indeed generated by a certain model; they cannot tell whether that is actually true behind the scenes.

Second, users have little need to verify, because what they value is the AI’s ROI. When ROI is low, users will migrate, since they will always choose the model with the best results. In short, when users care about the AI’s final outcome, verifying the process may be meaningless: they only need to migrate to the service with the best results.

One possible solution is for the AI to act only as an advisor while users execute trades themselves. Users input their trading goals, the AI computes off-chain and returns the best trading path or direction, and users do not need to verify the underlying model, since they simply choose the product with the highest returns.

A potentially dangerous, yet quite likely, situation arises when people disregard custody of their own assets and do not care about the AI computation process. If an automated money-making bot appears, people may be willing to deposit money into it, just as they deposit tokens into a CEX or a traditional bank for wealth management. Because they don’t care about the mechanism behind it, they only care about how much profit they receive in the end, or even just how much profit the project claims they have earned. Such a service may quickly gain a large number of users, and may even iterate faster than projects that use verifiable AI.

If AI does not trigger on-chain state changes at all, and only fetches and preprocesses on-chain data for users, there is also no need to generate ZKPs for the computation. We can call this type of application a “data service”. Some examples:

- The chatbot provided by Mest is a typical data service. Users can query their on-chain data through Q&A, for example asking how much they have spent on NFTs.

- ChainGPT is a multi-functional AI assistant. It can interpret smart contracts for you before trading, revealing whether you are trading in the right pool, and whether the transaction is prone to front running. ChainGPT also plans to offer services like AI news recommendations and publishing AIGC NFTs.

- RSS3 provides AIOP, which lets users select the on-chain data they need and apply some preprocessing, making it easier to use specific on-chain data to train AI.

- DefiLlama and RSS3 both have ChatGPT plugins so users can obtain on-chain data through conversation.

1.3.2 Use Cases Requiring Verifiable AI

This article argues that scenarios involving fairness among multiple parties, or privacy, require ZKPs for verification. Below we discuss some of the applications mentioned by Modulus Labs:

- When a community distributes rewards based on AI-generated individual reputations, community members will likely demand an audit of the evaluation process, which in this case is the ML computation.

- When AI optimizes an AMM, which involves distributing benefits among multiple parties, the AI computation also needs to be verified periodically.

- When balancing privacy and regulation, ZK is one of the better solutions. If the application uses ML to process private data in the service, a ZKP of the ML computation is needed.

- Because oracles have a wide-reaching impact, an AI-powered oracle needs to generate ZKPs periodically to show that the AI is functioning properly.

- In competitions, the public and other participants need to check whether the ML algorithms comply with the competition specifications.

- In the potential use case of Worldcoin, there is a strong need to ensure that the ML model is used in a way that protects the privacy of individuals.

Overall, whether AI triggers on-chain state changes and whether it affects fairness/privacy are the two criteria for determining whether verifiable AI is needed.

When AI does not trigger on-chain state changes, the AI service can act as an advisor, and people can judge its quality by the effect of its suggestions without verifying the computation process;

When AI does trigger on-chain state changes but the service serves only individuals and does not involve personal privacy, users can still judge the quality of the AI service without verifying the computation process;

When the output of AI directly affects fairness among different parties and AI triggers on-chain state changes, the community and the public will need to check the AI’s decision-making process;

When the data processed by ML involves personal privacy, then there is a definite need for ZK to protect privacy and respond to regulatory requirements.

Source: Kernel Ventures
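The two criteria above can be condensed into a small decision function. This is only an illustrative encoding of the article's reasoning, not a formal rule; the field names and the way the example use cases are classified are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    name: str
    triggers_state_change: bool         # does the AI output change on-chain state?
    affects_multi_party_fairness: bool  # does it allocate value/standing among parties?
    processes_private_data: bool        # does the ML consume personal data?


def needs_verifiable_ai(case: AIUseCase) -> bool:
    # Privacy: ZK is needed whenever ML processes personal data.
    if case.processes_private_data:
        return True
    # Fairness: outputs that change on-chain state and affect several parties.
    if case.triggers_state_change and case.affects_multi_party_fairness:
        return True
    # Otherwise the AI acts as an advisor or personal service, and users can
    # judge it by its results instead of verifying the computation.
    return False


CASES = [
    AIUseCase("data-service chatbot", False, False, False),
    AIUseCase("personal trading bot", True, False, False),
    AIUseCase("reputation-based rewards", True, True, False),
    AIUseCase("on-device iris-code model", True, False, True),
]

for case in CASES:
    print(f"{case.name}: {needs_verifiable_ai(case)}")
```

Note that the privacy check comes first: per the last criterion, private data calls for ZK regardless of whether the AI changes on-chain state.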

Part 2: Two Types of AI Application Architecture Based on Public Blockchains

In any case, the solution proposed by Modulus Labs is a great inspiration for how AI can be combined with blockchain to create practical application value. However, a public blockchain does more than enhance the capabilities of a single AI service: it can also support a new ecosystem of AI applications. This emerging ecosystem introduces a distinct paradigm for how AI services interact with each other and with users, and for how upstream and downstream parties collaborate. We can categorize this AI application ecosystem into two broad types: vertical and horizontal architectures.

2.1 Vertical Architecture: Focusing on Composable AI

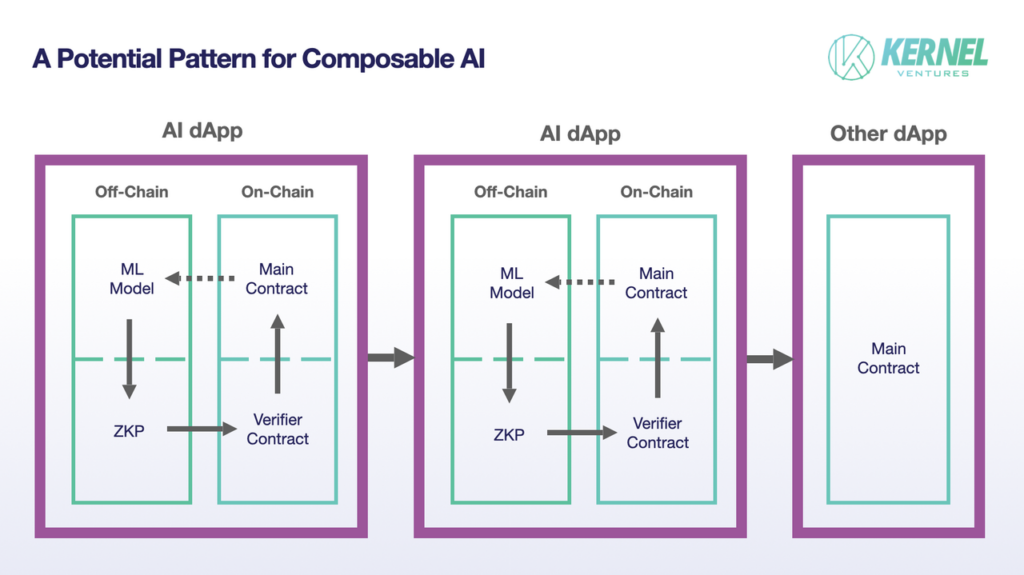

A unique aspect of the “Leela vs the World” chess game is that people can bet on either the human players or the AI, with tokens distributed automatically after the game based on the outcome. Here the ZKP does not just let users verify the AI’s calculations; it also serves as a trust guarantee for triggering on-chain state changes. With this trust guarantee, composability becomes possible at the dApp level, both between AI services and between AI and native crypto dApps.

Source: Kernel Ventures (based on the model from Modulus Labs)
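The article does not say how “Leela vs the World” splits the pot, so the sketch below assumes a simple pro-rata (parimutuel) rule: winners get their stake back plus a share of the losing side's pool proportional to their stake. What it illustrates is that payout is gated on the game result having passed verification; the `result_verified` flag is a hypothetical stand-in for the on-chain verifier's verdict.

```python
def settle_bets(bets, winning_side, result_verified):
    """Pro-rata (parimutuel) payout, released only after the result is verified.

    bets: list of (bettor, side, amount) with positive integer amounts.
    Returns a dict bettor -> tokens paid out. Integer division may leave a few
    tokens of rounding dust in the pool.
    """
    if not result_verified:
        raise ValueError("game result has not passed on-chain proof verification")
    winners = [(bettor, amount) for bettor, side, amount in bets if side == winning_side]
    if not winners:
        # Nobody backed the winning side: refund everyone (one possible policy).
        refunds = {}
        for bettor, _, amount in bets:
            refunds[bettor] = refunds.get(bettor, 0) + amount
        return refunds
    win_pool = sum(amount for _, amount in winners)
    lose_pool = sum(amount for _, side, amount in bets if side != winning_side)
    payouts = {}
    for bettor, amount in winners:
        # Stake back plus a share of the losing pool proportional to the stake.
        payouts[bettor] = payouts.get(bettor, 0) + amount + lose_pool * amount // win_pool
    return payouts


bets = [("alice", "human", 30), ("bob", "human", 10), ("carol", "ai", 20)]
print(settle_bets(bets, "human", result_verified=True))  # {'alice': 45, 'bob': 15}
```

Integer token amounts are used deliberately, since on-chain balances are integers and floating-point payouts would not reconcile exactly.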

The basic unit of composable AI, derived from the framework of “Leela vs the World”, is [off-chain ML model – ZKP generation – on-chain verification contract – main contract], although the actual architecture of individual AI dApps may differ from the diagram.

First, the chess game needs a contract to track game state, whereas in general an AI service may not need an on-chain contract. For composable AI, however, if the main activity happens in a contract, it may be easier for other dApps to integrate with it.

Second, the main contract does not necessarily need to feed back into the AI dApp’s own ML model. An AI dApp’s ML model only needs to trigger a contract related to its own actions, and other dApps can then call that contract.
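A minimal sketch of this unit, assuming a pluggable verifier: the main contract only updates its state when the verifier accepts the AI's proof, and another dApp composes with it simply by reading that state. The `stub_verifier` below offers no security at all (it accepts any proof that restates the public inputs); it only marks where a real ZKP verifier contract would plug in. All names are hypothetical.

```python
from typing import Callable, List

# Hypothetical verifier interface: (proof, public_inputs) -> bool. On-chain this
# would be a ZKP verifier contract; here any callable with that shape works.
Verifier = Callable[[dict, dict], bool]


class ChessMainContract:
    """Main contract: holds game state; AI moves pass through the verifier first."""

    def __init__(self, verifier: Verifier):
        self.verifier = verifier
        self.moves: List[str] = []

    def human_move(self, move: str) -> None:
        self.moves.append(move)  # humans call the contract directly from a wallet

    def ai_move(self, move: str, proof: dict) -> bool:
        public_inputs = {"position": tuple(self.moves), "move": move}
        if not self.verifier(proof, public_inputs):
            return False  # an unverified AI output never changes on-chain state
        self.moves.append(move)
        return True


def latest_move(game: ChessMainContract) -> str:
    """Another dApp composing with the game: it reads contract state and never
    has to trust the off-chain AI directly."""
    return game.moves[-1] if game.moves else ""


def stub_verifier(proof: dict, public_inputs: dict) -> bool:
    # Toy stand-in (insecure): the "proof" just restates the inputs it attests to.
    return proof.get("attests") == public_inputs


game = ChessMainContract(stub_verifier)
game.human_move("e4")
proof_for_e5 = {"attests": {"position": ("e4",), "move": "e5"}}
# The first call submits a move the proof does not cover, so it is rejected.
print(game.ai_move("d5", proof_for_e5), game.ai_move("e5", proof_for_e5), latest_move(game))
```

Keeping the verifier behind a narrow interface is what lets other dApps rely on the main contract's state without knowing anything about the model.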

Contract calls between dApps enable composability between different Web3 applications, including identity, asset management, financial services, and even social information. We can envision a specific combination of AI applications:

- Worldcoin uses ML to generate an iris code from an individual’s iris data, along with a ZKP.

- A reputation-scoring AI app first verifies that the user is a real person (backed by the iris data), then assigns an NFT based on the user’s on-chain reputation.

- A lending service adjusts the loan amount based on the NFTs held by the user.

- …
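The chain above can be sketched as three contracts that each read the previous one's state. The tier thresholds, multipliers, base limit, and the `proof_ok` flag (standing in for a verified iris-code ZKP) are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class PersonhoodRegistry:
    """Stand-in for a Worldcoin-style contract: addresses whose iris-code ZKP verified."""
    verified: Set[str] = field(default_factory=set)

    def register(self, address: str, proof_ok: bool) -> None:
        if proof_ok:  # proof_ok would come from an on-chain ZKP verifier
            self.verified.add(address)


@dataclass
class ReputationNFT:
    """Reputation app: mints a tier NFT only for verified persons."""
    registry: PersonhoodRegistry
    tier_of: Dict[str, str] = field(default_factory=dict)

    def mint(self, address: str, score: int) -> None:
        # `score` would be the output of a verifiable reputation model.
        if address not in self.registry.verified:
            raise PermissionError("address has no verified personhood proof")
        self.tier_of[address] = "gold" if score >= 80 else "silver" if score >= 50 else "bronze"


@dataclass
class LendingPool:
    """Lending app: sizes the credit limit from the borrower's reputation NFT."""
    reputation: ReputationNFT
    base_limit: int = 1_000
    multipliers: Dict[str, int] = field(
        default_factory=lambda: {"bronze": 1, "silver": 2, "gold": 5})

    def credit_limit(self, address: str) -> int:
        tier: Optional[str] = self.reputation.tier_of.get(address)
        return self.base_limit * self.multipliers[tier] if tier else 0


registry = PersonhoodRegistry()
registry.register("0xA", proof_ok=True)
registry.register("0xB", proof_ok=False)
reputation = ReputationNFT(registry)
reputation.mint("0xA", score=85)
pool = LendingPool(reputation)
print(pool.credit_limit("0xA"), pool.credit_limit("0xB"))  # 5000 0
```

Each contract trusts only the state of the one before it; the trust in the underlying AI computations is established once, at verification time.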

AI interaction on public blockchains is not a new idea. Realms ecosystem contributor Loaf has proposed that AI NPCs could trade with players and with each other, allowing the entire economic system to self-optimize and run automatically. AI Arena has developed a game in which AIs battle each other automatically: users first purchase an NFT representing a battle robot backed by an AI model, play the game themselves to provide data for the AI to learn from, and, when it is ready, deploy it to battle other AIs. Modulus Labs has mentioned that AI Arena wants to make these AIs verifiable. In both cases, we see the potential for AIs to interact with each other and directly change on-chain state.

However, many details of implementing composable AI in practice remain to be worked out, such as how different dApps can leverage each other’s ZKPs and verifier contracts. But given the amount of innovation in the zero-knowledge space, for example RISC Zero’s work on running complex computations off-chain and generating ZKPs for on-chain verification, suitable solutions may soon emerge.

2.2 Horizontal Architecture: Focusing on Decentralized AI Platforms

Here we introduce a decentralized AI platform called SAKSHI, proposed by researchers from Princeton, Tsinghua University, University of Illinois Urbana-Champaign, HKUST, Witness Chain and Eigen Layer. Its core objective is to enable users to obtain AI services in a more decentralized manner, making the entire process more trustless and automated.

Source: SAKSHI

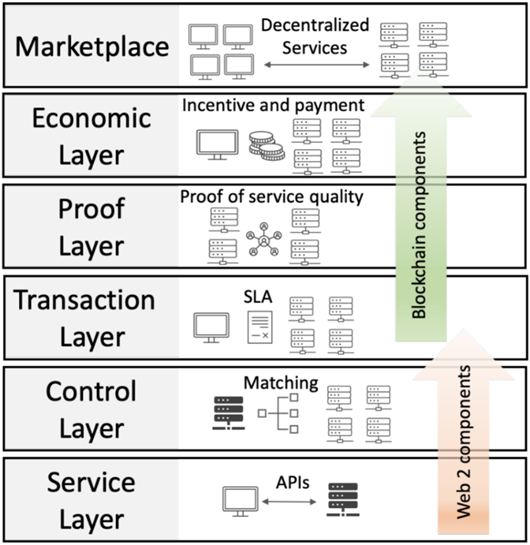

SAKSHI consists of six layers: Service Layer, Control Layer, Transaction Layer, Proof Layer, Economic Layer and Marketplace.

The Marketplace is the layer closest to users. Users place orders through aggregators and sign an agreement on service quality and pricing, the SLA (service level agreement).

The Service Layer then provides an API to the client, which sends ML inference requests to the aggregator. Each request is forwarded to a matching supplier server by a router deployed as part of the Control Layer. The Service and Control Layers therefore resemble a Web2 service with multiple servers, except that each server is operated by a different entity and is tied to the aggregator through the SLAs signed earlier.

SLAs are deployed on-chain as smart contracts on the Transaction Layer (which SAKSHI calls the Witness Chain). The Transaction Layer also records the current state of each service order and is used to coordinate users, aggregators, and service providers and to handle payment disputes.
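As a rough illustration of the routing step, the sketch below assumes the router picks the cheapest supplier whose SLA covers the requested model and latency budget. The SLA fields (`model`, `max_latency_ms`, `price_per_call`) and the selection rule are hypothetical; the SAKSHI paper may define matching differently.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class SLA:
    """Hypothetical SLA terms; the SAKSHI paper defines its own fields."""
    provider: str
    model: str
    max_latency_ms: int
    price_per_call: int


class Router:
    """Control-layer router: forwards a request to a supplier whose SLA fits it."""

    def __init__(self, slas: Iterable[SLA]):
        self.slas = list(slas)

    def route(self, model: str, latency_budget_ms: int) -> Optional[SLA]:
        candidates = [s for s in self.slas
                      if s.model == model and s.max_latency_ms <= latency_budget_ms]
        if not candidates:
            return None
        # Cheapest qualifying supplier; ties broken by latency, then name.
        return min(candidates, key=lambda s: (s.price_per_call, s.max_latency_ms, s.provider))


router = Router([
    SLA("p1", "model-a", 200, 5),
    SLA("p2", "model-a", 100, 5),
    SLA("p3", "model-a", 50, 9),
    SLA("p4", "model-b", 100, 1),
])
chosen = router.route("model-a", latency_budget_ms=150)
print(chosen.provider if chosen else None)  # p2
```

The deterministic tie-break matters if routing decisions are later audited against the on-chain SLAs: anyone replaying the same inputs gets the same supplier.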

To provide a basis for resolving disputes, the Proof Layer verifies if service providers adhere to the SLA by examining the models used. However, instead of generating ZKPs for ML computations, SAKSHI proposes an optimistic approach – establishing challenger nodes to audit services, incentivized by Witness Chain.
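The optimistic approach can be sketched as a small state machine: a provider posts a result, a challenge window opens, and a challenger who re-executes the SLA's model and finds a mismatch triggers slashing. This is a hypothetical simplification, not SAKSHI's protocol: it assumes the model is deterministic and cheap to re-run, measures time in block heights, does not track escrowed fees or the provider's bond explicitly, and does not penalize failed challenges (a production design would likely need a challenger bond to deter spam).

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Dict


class State(Enum):
    SUBMITTED = auto()  # result posted, challenge window open
    PAID = auto()       # window closed with no successful challenge
    SLASHED = auto()    # a challenger showed the result was wrong


@dataclass
class Order:
    user: str
    provider: str
    x: int             # request input
    claimed: int       # provider's claimed output
    submitted_at: int  # block height when the result was posted
    state: State = State.SUBMITTED


class OptimisticLedger:
    """Hypothetical optimistic verification; `balances` records payouts only."""

    def __init__(self, sla_model: Callable[[int], int], window: int, stake: int, fee: int):
        self.sla_model = sla_model  # the model the SLA says the provider must run
        self.window = window
        self.stake = stake          # provider bond, forfeited on a successful challenge
        self.fee = fee
        self.balances: Dict[str, int] = {}

    def _credit(self, who: str, amount: int) -> None:
        self.balances[who] = self.balances.get(who, 0) + amount

    def challenge(self, order: Order, challenger: str, now: int) -> bool:
        if order.state is not State.SUBMITTED or now > order.submitted_at + self.window:
            return False  # too late, or already settled
        if self.sla_model(order.x) == order.claimed:
            return False  # re-execution agrees: the challenge fails
        order.state = State.SLASHED
        self._credit(order.user, self.fee)    # refund the user's fee
        self._credit(challenger, self.stake)  # challenger receives the forfeited bond
        return True

    def finalize(self, order: Order, now: int) -> State:
        if order.state is State.SUBMITTED and now > order.submitted_at + self.window:
            order.state = State.PAID
            self._credit(order.provider, self.fee)
        return order.state


ledger = OptimisticLedger(lambda x: 2 * x + 1, window=10, stake=50, fee=5)
honest = Order("u1", "p1", x=3, claimed=7, submitted_at=0)
cheat = Order("u2", "p2", x=3, claimed=8, submitted_at=0)
print(ledger.challenge(honest, "c1", now=5), ledger.challenge(cheat, "c1", now=5))  # False True
print(ledger.finalize(honest, now=11), ledger.finalize(cheat, now=11))
print(ledger.balances)
```

Compared with generating a ZKP for every call, this shifts cost to the rare disputed case, at the price of a delay before providers are paid.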

Although the SLAs and the challenger network live on the Witness Chain, SAKSHI does not plan to give the Witness Chain independent security through incentives paid in its native token. Instead, it leverages Ethereum’s security through Eigen Layer, so the entire Economic Layer essentially relies on Eigen Layer.

SAKSHI thus acts as an intermediary between AI providers and users, bringing different AIs together in a decentralized manner to serve its users; this is more of a horizontal approach. SAKSHI aims to free AI providers to focus solely on their off-chain model computation, while on-chain protocols match user needs to model services, handle payments, and verify service quality in a more automated way. Of course, as SAKSHI is still theoretical, many implementation details remain to be worked out.

Part 3: Future Outlook

Whether in composable AI or decentralized AI platforms, blockchain-based AI ecosystems seem to share a common pattern: AI providers can interact directly with users and only need to supply ML models for off-chain computation, while payments, dispute resolution, and matching user needs with services are handled by trustless protocols. As a trustless base layer, blockchains reduce friction between providers and users and give users greater autonomy.

The advantages of blockchains as a base layer are well understood, and they do suit AI services. Unlike typical dApps, however, AI applications cannot run all their computation on-chain, so ZK or optimistic approaches are needed to integrate AI services in a more trustless manner.

As improvements such as account abstraction enhance the user experience, elements like private key management, chains, and gas may become invisible to users. With a smooth experience resembling Web2 UX, combined with much greater freedom and composability that provide strong incentives for mass adoption, blockchain-based AI application ecosystems have an exciting future ahead.

REFERENCE

- Chapter 1: How to Put Your AI On-Chain: https://medium.com/coinmonks/chapter-1-how-to-put-your-ai-on-chain-8af2db013c6b

- Chapter 4: Blockchains that Self-Improve: https://medium.com/@ModulusLabs/chapter-4-blockchains-that-self-improve-e9716c041f36

- Chapter 6: The World’s 1st On-Chain AI Game: https://medium.com/@ModulusLabs/chapter-6-leela-vs-the-world-the-worlds-1st-on-chain-ai-game-17ea299a06b6

- AN INTRODUCTION TO ZERO-KNOWLEDGE MACHINE LEARNING (ZKML): https://worldcoin.org/blog/engineering/intro-to-zkml#zkml-use-cases

- Zero-Knowledge Proof: Applications and Use Cases: https://blog.chain.link/zero-knowledge-proof-use-cases/

- SAKSHI: Decentralized AI Platforms: https://arxiv.org/pdf/2307.16562.pdf

- Honey, I Shrunk the Proof: Enabling on-chain verification for RISC Zero & Bonsai: https://www.risczero.com/news/on-chain-verification

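- Conversation with the Nil Foundation founder: ZK technology may be misused, and public traceability was never the original intent of crypto: https://www.techflowpost.com/article/detail_12647.html

- IOSG Weekly Brief | Lighting the Spark of Blockchain: LLMs Open New Possibilities for Blockchain Interaction #187: https://mp.weixin.qq.com/s/sVIBF6iPXwhamlKEvjH19Q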